I’m going to present some loose notes that accompanied me in the production of some recent work.[1] These have the perhaps frustrating feature of being almost overly focused on particular structures in the world that I don’t expect to constitute an interpretation of my exhibition More Heat than Light.[2] The following comes out of reviewing some of the ideas and constraints that arose during the work’s production and circulation. So there will be very little in the way of presenting reasons, methodological claims, justifications or even descriptions of the work, although there will be a little of that. Instead I want to focus on a constellation of concepts and forms that I’ve had to deal with and which are leading me down some paths that remain unresolved. In fact, I want to constellate these concepts mostly around a single image.

First, here is the live feed from the thermal camera in the exhibition projected behind me. My initial interest in using this camera to document the work arose by way of my suspicion that traditional installation documentation was going to be unsatisfactory, as the work is particularly vulnerable to the static view that install shots provide of what’s on display. I wanted to make the instantaneity with which exhibitions circulate as images, presenting supposedly synoptic and more or less transparent access to ‘what was in the space’, a critical problem for More Heat than Light’s transmission. This kind of camera is used not simply for traditional security monitoring but for the monitoring of ‘core temperatures’ in production, to access otherwise insensible registers of potential organizational, mechanical or other problems on, say, a factory line or in a shipping center, and so is linked with an economy of means that privileges the terms of logistical efficiency and optimal functioning within production and circulation.

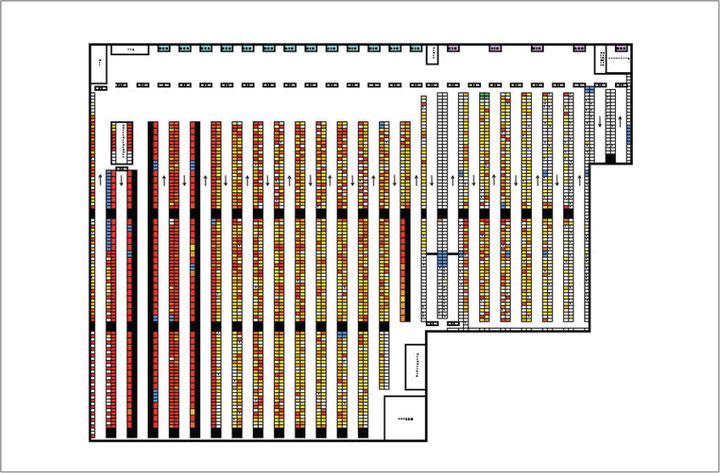

Here is this image [see on the upper left]. It’s taken from the brochure for a company that provides the service of assessing productivity in places like distribution centers.

This in particular is the pick system of one of these centers that they analyzed. The service consists in placing a thermal camera like the one in More Heat than Light on the shop floor to create a real-time record of activity that can be monitored. However, the ultimate goal is the recommendation of efficiency measures. Basically, the company ‘grades’ worker and mechanical performance on the level of shop organization. The company will leave the camera running for up to a year, focusing on the turnaround times of the place that’s hired them. The long tail data that is gathered from this is then translated into a gridded diagram of hotspots and un-utilized ‘cold’ pockets of the distribution/production line. Those data are then imported into a CAD program, collated and superimposed to derive local averages of thermal activity. These averages are represented by the color gradients that the thermal camera uses as a metric. It submits and grades the whole organizational complex of bodies, hardware, the spatial diagram of the racks, the HVAC system etc. In short, the data retrieved from the process of monitoring and localizing regularities and tendencies within the gradient of thermal activity on the shop floor emerges by virtue of making those data into a grid, and then providing planning and efficiency recommendations based on that grid.
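As a rough illustration of the collating and averaging described above, and not the company’s actual software, the following sketch superimposes a stack of thermal frames, averages them over time, and then averages again over square grid cells. The frame values, the cell size and the hot/cold thresholds are all made-up assumptions, as are the function names.

```python
from statistics import mean

def grid_averages(frames, cell):
    """Average a stack of thermal frames over time, then over square cells.

    frames: list of 2D lists of temperature readings, all the same shape.
    cell:   side length of one grid cell in pixels (hypothetical parameter).
    """
    rows, cols = len(frames[0]), len(frames[0][0])
    # Superimpose the frames: one temporal average per pixel.
    time_avg = [[mean(f[r][c] for f in frames) for c in range(cols)]
                for r in range(rows)]
    # Cast the grid: one spatial average per cell.
    grid = []
    for r0 in range(0, rows, cell):
        grid_row = []
        for c0 in range(0, cols, cell):
            block = [time_avg[r][c]
                     for r in range(r0, min(r0 + cell, rows))
                     for c in range(c0, min(c0 + cell, cols))]
            grid_row.append(mean(block))
        grid.append(grid_row)
    return grid

def classify(grid, hot, cold):
    """Label each cell 'hot', 'cold' or 'mid' against two assumed thresholds."""
    return [["hot" if v >= hot else "cold" if v <= cold else "mid" for v in row]
            for row in grid]

# Two invented 4x4 frames standing in for a year of footage.
frames = [
    [[30, 30, 18, 18],
     [30, 30, 18, 18],
     [22, 22, 20, 20],
     [22, 22, 20, 20]],
    [[34, 34, 18, 18],
     [34, 34, 18, 18],
     [24, 24, 20, 20],
     [24, 24, 20, 20]],
]
grid = grid_averages(frames, cell=2)
labels = classify(grid, hot=30, cold=19)
```

Changing `cell` is the expansion or retraction of the grid: the same footage yields a coarser or finer picture of where activity concentrates.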

It is entirely imaginable that there are more and less fine-grained ways of depicting the averages within this image of the pick system. Grids are after all structures that map surfaces with infinitely expandable or retractable sets of units. The results could plausibly be complicated or simplified according to shifts in timescale, for example, or a further segmentation of the spatial diagram, or by accounting for different constraints. But this only after being submitted to an expansion or retraction of the grid, which remaps the physical planning of the slotting racks of a distribution center such as this one, or the rail-guided machines that transport cell bound matrices through an assembly line.

Grids define surfaces with sharp rectilinear cuts, producing general orthogonal continuity. But definition cedes to a function of control. As Bernhard Siegert has noted with regard to the cultural technique of the grid or lattice, “its salient feature is its ability to merge operations geared toward representing humans and things with those of governance.”[3] On Siegert’s account, representation and control merge through three basic features. 1) Bodies in three-dimensional space are located and given an address by being projected on a two-dimensional plane. 2) This address becomes freighted data, enabling that data to be retrieved and implemented according to their placement on the grid’s plane. 3) This functional placement and storage “operationalizes deixis”, in Siegert’s terms. Deixis means the binding of phenomena to a contextual matrix for its definition, thereby subjecting whatever the grid locates – which is seemingly anything whatever – to those processes of placement or ‘enframing’ (Gestell), storage and retrieval. The grid in Siegert’s account is a media form that operationalizes this type of contextual dependency, in the sense that it provides both the specific address – ‘here’ or ‘there’ or ‘you’ or ‘me’ – while also determining the location of all the other addresses that need to be drawn on for the intelligibility of the location. This understanding of gridded space follows an essentially performative logic. The grid locates, stores, regulates and controls the phenomena that it puts in place – it is in the business of mastering the terms of its own discourse, which it set up in the first instance.

A gradient on the other hand, understood physically, is defined first by an extremely granular transition between qualities. The variety or homogeneity of those qualities depends on a preexisting assemblage of environmental determinations, such as volumetric and dimensional mass, viscosity, environmental and specific forms of material resistance, as in the case of the thermal conductivity of glass versus a metal, and determinations of convection and air flow. (This of course also functions for information where calculations based on restricted but essentially unpredictable sets of data function as a gradient of probability for various types of behavior or action. This goes for materialities that lack the exact address or locational information. Gamblers who can count cards use these kinds of probability calculations.) The simplest definition of a gradient is that it is a difference that can be measured across a distance. If this is right, it is possible to say that the difference consists in magnitudes of intensity, as opposed to scales of control as is the case for subjection to gridded space. Gradients are essentially contingent on material intensities of difference that retain a level of particularity even as, or especially because, their edges participate in transfers and displacements. The thermal mass of a concrete or oak floor will speed up or slow down the temperature change of, say, a group of oversized heating circuits because the materials conduct heat at different rates, which is further complicated by rates of heat dissipating into the cold sink of space.
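The “difference that can be measured across a distance” can be put in numbers with a small sketch. It assumes one-dimensional steady conduction (Fourier’s law, taken here in magnitude only) and uses rough textbook conductivities for glass and copper; the temperatures, the thickness and the function names are invented for illustration.

```python
def gradient(t_hot, t_cold, distance):
    """Temperature difference per unit distance, in kelvin per metre."""
    return (t_hot - t_cold) / distance

def heat_flux(conductivity, t_hot, t_cold, distance):
    """Magnitude of Fourier's law in one dimension: k * (dT / dx), in W/m^2."""
    return conductivity * gradient(t_hot, t_cold, distance)

# Approximate conductivities in W/(m*K); illustrative values, not measurements.
K_GLASS = 1.0
K_COPPER = 400.0

# The same 20 K difference across the same 1 cm of material.
g = gradient(20.0, 0.0, 0.01)
flux_glass = heat_flux(K_GLASS, 20.0, 0.0, 0.01)
flux_copper = heat_flux(K_COPPER, 20.0, 0.0, 0.01)
```

The gradient is identical in both cases, while the flow it drives differs by the ratio of the two conductivities: the material, not the grid, decides how fast the difference is reduced.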

Very generally, between the grid and the gradient there is – not really a dialectic – but at least a mediation, a dependency between the gradient’s intensive collision of particular structures, and the grid’s totalizing matrix, which allows the elements of gradation to be located, ruled and regulated. The thermal camera, for example, shows a temperature gradient, but the digital sensor that makes that visible to the human sensory apparatus is wholly the product of having cast a net over its focal range, formatted and segmented for the XY axis of a screen image.

A grid prescribes a place, while a grade describes a performance.

_____

Yet while a subject’s condition of emergence might be local to the performative space of a grid – prescribed a place by it – gradients have their own logic of emergence. Or to be more exact, the reduction of gradients through entropic processes is what physics tells us causes individuals to emerge with differentiated contours from circulating energetic forces within so-called non-equilibrium or dissipative systems.

Entropy processes are about gradient reduction. The claim that contemporary thermodynamics has made in the context of late 20th century ecology is this: nature doesn’t abhor a vacuum, it abhors a gradient.[4] (This is the title of a paper by Schneider and Kay, 1989.) The second law of thermodynamics, the law of entropy, is complicated and extended by this graded view of its operation. What is at issue is not so much the inevitable disorganization and diffusion of relatively closed mechanical structures – like 19th century heat engines and steam tables, or the statistically defined movement of gas molecules as models for planetary heat death or something like that – what becomes important is not a diffuse disorganization of previously ordered states, but rather the emergence of order that is attendant upon the path that leads from systemic breakdown and disorder to higher, more complex levels of organization. This resolves in what’s been called the 4th law of thermodynamics, which comes out of the work of Rod Swenson during the late 80s. It is also referred to as the Law of Maximum Entropy Production (LMEP). Its principle is described by Swenson in very basic terms under the heading “LMEP or Why the World is in the Order Production Business”, as such:

“Imagine any out of equilibrium system with multiple available pathways such as a heated cabin in the middle of snowy woods (Swenson & Turvey, 1991). In this case, the system will produce flows through the walls, the cracks under the windows and the door, and so on, so as to minimize the potential. What we all know intuitively (why we keep doors and windows closed in winter) is that whenever a constraint is removed so as to provide an opportunity for increased flow the system will reconfigure itself so as to allocate more flow to that pathway leaving what it cannot accommodate to the less efficient or slower pathways. In short, no matter how the system is arranged the pattern of flow produced will be the one that minimizes the potential at the fastest rate given the constraints.”[5]
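The cabin can be caricatured in a few lines. This is a deliberately simple linear model, not Swenson’s formalism: each pathway is assumed to have a fixed conductance, and flow through it is taken as conductance times the indoor/outdoor temperature difference. The pathway names and every number are hypothetical.

```python
def allocate_flow(conductances, delta_t):
    """Heat flow through each parallel pathway, in watts: Q_i = G_i * dT."""
    return {name: g * delta_t for name, g in conductances.items()}

def shares(conductances):
    """Fraction of the total flow carried by each pathway."""
    total = sum(conductances.values())
    return {name: g / total for name, g in conductances.items()}

# Assumed conductances in W/K; the values are made up.
closed = {"walls": 40.0, "window cracks": 10.0}
opened = dict(closed, door=150.0)  # removing a constraint: the door opens

delta_t = 25.0  # assumed indoor/outdoor difference in kelvin
flow_closed = allocate_flow(closed, delta_t)
flow_open = allocate_flow(opened, delta_t)
door_share = shares(opened)["door"]
```

In this toy version, opening the door quadruples the total rate at which the potential is reduced, and the new pathway carries three quarters of the flow, with the walls and cracks left as the slower routes.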

This 4th law tries to answer the question of why orders of energetic flow seem to keep emerging precisely at the site where order is supposed to be destroyed: where the dissipation of energy is taking place. The answer that physics gives is an opportunistic one, where the tendential law of energy diffusion over time is not simply countered by feedback loops (cybernetics), by negentropic pockets of temporary balance, settling on provisional equilibrium. Rather LMEP claims that energy takes the most efficient and opportune path toward dissipation. It postulates that the path itself is the organizational form emerging from the tendency to disorder. The path is formed opportunistically – on the back of some other environmental factor – and then becomes progressively determined. Taken on an ecological scale this is supposed to account for the massive, non-equilibrated complexity of nature. Swenson: “On the ecological view … we are productions (order) of a world that by its productions (order) produces more order (productions) ... Global evolution is an epistemic process by which new order is produced by hooking dissipative dynamics onto new higher order macroscopic invariants.”[6]

I understand the science here in really only the most cursory way. What I’m interested in has nothing to do with providing positivistic descriptions of emergence. I don’t describe my work in scientific terms, and the work doesn’t use those terms to describe other phenomena. It makes no pretense to be science, or to be about scientific findings.

But I am interested in the manner in which the concepts and language that structure the representational screen of scientific exposition are bound to a historical nucleus that reaches into other areas of application and experience. For instance, Catherine Malabou has discussed this reach in relation to the historical simultaneity of the emergence of de-centralized forms of neo-liberal control and the increasingly de-centralized picture of neuronal functioning from advanced neuroscience. With reference to Luc Boltanski and Ève Chiapello, we find a nice description of this mode of representational convergence:

“This is how the forms of capitalist production accede to representation in each epoch, by mobilizing concepts and tools that were initially developed largely autonomously in the theoretical sphere or in the domain of basic scientific research … In the past it was true of such notions as system, structure, technostructure, energy, entropy, evolution, dynamics, and exponential growth.”[7]

What for a researcher initially stands in the place of scientific description becomes the prescriptive domain of how things ought to function because they seem to correspond with the most updated scientific picture of nature. Emphasis on emergence from gradient reduction, on spontaneous order from the efficiency of entropic processes, might suggest a familiar organizational form of social production and circulation that is tied to opportunistic efficiency measures drawn out of complex systemic interconnectedness.

The maximization of efficiency is nothing new in the sphere of production. It constitutes the basic impulse of both relative and absolute forms of surplus-value extraction. In terms of the exploitation of labor in commodity production for instance, efficiency becomes a kind of code word for either extensively maximizing the time of manufacture – extending work hours to meet projected average rates of profit – or intensifying the automation of the production process itself through new technologies, to speed up the yield and turn-around time of the production and circulation of commodities.

Perhaps the most canonical example of efficiency planning is to be found in the Taylorized scientific management of the factory floor. Here gesture is streamlined so that ‘wasted’ or ‘superfluous’ gestures are eliminated from an increasingly mechanized labor process. In this example, the regulation of the time of manufacture is still bound to a corporeal, laboring body – a body whose powers appear as capital within the production process in so far as they form a closed circuit with the tools and materials they work on. This involves the predetermination of material and tool handling within production as a means of self-valorization.

But this old time-y Taylorization strategy for motion and efficiency planning (raising the tool like so, turning the crank thusly, etc.) radiates away from production proper toward the logistical realities of networked circulation, where global standards come up against local constraints in need of complex real-time management, to use the managerial patois. In the thermal analysis of the distribution center, motion planning in the form of reducing individually contained gestural atoms cedes to the plotting of more efficient paths on a larger organizational level. It becomes a matter of assessing total environmental control within a larger ‘ecology’ of controlled environments. The goal is to keep the ‘gradient reduced’, to eliminate potential movement and energy intensive patterns within any given environment for the planning of one streamlined path to the next – from one pick system to the next. The pick system of any given distribution center is one node in a massively complex supply chain. The role of efficiency in the turn-around time of circulation bears heavily on the reach of that path from systems monitoring in logistics to the associated cost-analytics of computing things like energy-prices, pegged to the spontaneous ideology of financial markets.

This is why companies like Amazon are so focused on promoting robot vision and motion planning, which follows algorithmic structures such as so-called ‘visibility graphs’ that are based on optimal path calculation – to say nothing of the elimination of wages and the potential for employees to complain about deplorable labor conditions. Amazon is not an arbitrary example, as they are the largest and most sophisticated user of real-time minute-to-minute tracking systems which include machines that measure packaging weight and assess if packers loaded the boxes in “the one best way,” as an article in Salon reports.[8] The Taylorist management of energy expenditure in labor is broken down into sub-tasks where movement, shelving and packing of goods are measured against task deadlines down to the second. This includes manager scrutiny of the organization of bathroom breaks as they pertain to the physical organization of the shop floor, where – again as Salon reports – employees can be fired for taking the least efficient path to the bathroom. The ‘one best way’ is the organizational motto of labor management, where ‘way’ does not simply describe method, in the sense of ‘manner’, but ‘path’ in the sense of physical conduit. This puts the manager in the place of a Maxwell’s demon.

The manager constantly sorts through so-called ‘process maps’ like the pick-system shown in the image, which result from monitoring waste on the line, and serve as the basis for the continual opportunistic streamlining of the most efficient path for task organization. Constant streamlining means constantly removing constraints and therefore more effectively reducing the gradient of possible actions – so that a new more efficient order of work emerges by speeding up target times for each given task, grinding the organic composition of labor down to an inhuman temporality.

_____

So I think it is obvious that I want to think of grids and gradients not simply as formal tropes to be analyzed. Rather I want to understand the mediation between the grid and the gradient as paradigmatic – or specifically as a mediatic – determination of the deployment and analysis of a worldview. In other words, the mediation between the grid and the gradient is a productive mediation. I think this means that taken together, grids emerging from gradients offer epistemic handles on processes that organize social production.

Within my own immediate context of operation: Artworks being shuttled from institution to institution, from gallery to biennale to fair, are as much subject to the accelerated speed of the systemic grid of relations as the course of the ship that carries their crates, the warehouse organization that stores them, and even the map/app on my phone, sending me stumbling through a new or vaguely recollected city plan. I want to ask if this recursion of categories back into the context that they control might constitute the potential to develop a critical methodological stance from them.

But where to locate the ground of this stance when it comes to the circulation of artworks?

The historical project of site specificity, developing throughout the 60s and 70s and for several generations after, I think can fairly be characterized as an irrevocable bid to abnegate the ideological illusion of autonomy from art’s social, economic, political, geographic formation. However, this path toward locating the real conditions of production and distribution seems to have become the fully accommodated project of institutional practice and commissioning, municipal agendas, positioning the globetrotting artist as a global content production machine. What ironically seems to have resulted from the fall-out of site specificity is a universal artistic position. What manifests is particularly sited research on – sometimes genuinely – revealing or pressing data about a site, to be fed back into that site before the artist is flown in to the next location to do the same. The conditions of circulation seem to have superseded those of the specificity of any site whatever.

Thinking about this – and certainly my own implication in it – has led me to an attempt to develop a concept of the work’s place out of this question about how to localize the artwork in an increasingly distributed and abstract culture of control. This has something to do with the term ‘weak locality’ replacing the concept of site. Localization pertains to where one begins the process of thinking or acting on a certain phenomenon. But the beginning commences on the back of a moving train.

The exhibition space is not treated by More Heat than Light as a site to which the work is integrally tied, but as a locale whose specificity is attenuated by its standards of operation: its attachment to a grid of protocols of symbolic exchange with other institutions and cities, as much as the physical electrical grid, which itself is ruled as infrastructure by coded standards of electrical operation and environmental control. The work localizes itself in a manner that is weak enough to circulate – to a non-profit institution in San Francisco, an Airbnb in New York or a Kunsthalle in Europe – but which neither treats that site’s infrastructure as a neutral support for autonomous objects imported from their production context, nor makes a claim to site specificity on the level of centralizing the work’s production and meaning on the level of its presence to the immediate conditions of the site.

Rather, I want infrastructure to be reflected, thickened and delimited by the intervention of the work itself – introducing other gradients of possibility that reorder those standards by converting energy consumption from a lighting to a heating apparatus. Energy is localized in its generation and expenditure, but it has no site to which it properly belongs.

Weak Local Lineaments is the title that I give to these works. It sort of parodies a certain scientific language, but I don’t think it gives technical or transparent access to the work’s conceptual content. It does function for me in a heuristic manner – but again I presume no transparency in its use. This is especially since it verges on the edge of sheer nonsense – of a reflected struggle with description as a condition of the work’s place in the world. Weak locals are as weakly autonomous as they are dependent on the location in which they operate and which temporarily houses them. ‘Weakness’ is not a pejorative term here, but an affirmation of the processes of potential decoupling and reorientation that bind a broader presentation of experience beyond the scope of any particular site.

Ultimately the question I want to ask myself is: How does the work push against the presentational limit of the concepts that determine it? The methodology, if there is one, is this: to build a structure up while tearing it down at the same time.

[1] The following is the transcript of a talk originally presented at the symposium “The Whole Cool System”, organized by Simon Baier at Eikones NFS Bildkritik, Basel, May 27th, 2016.

[2] Kunsthalle Basel, 1 April – 29 May 2016, https://www.kunsthallebasel.ch/en/exhibition/more-heat-than-light/

[3] Siegert, Bernhard, Cultural Techniques (Fordham University Press 2015), p. 97.

[4] “Nature Abhors a Gradient” is the title of a paper published in 1989 by Eric D. Schneider and J. J. Kay.

[5] Swenson, Rod, “Spontaneous Order, Autocatakinetic Closure, and the Development of Space-Time”, in: Annals of the New York Academy of Sciences, Vol. 901, Issue 1, 2000, pp. 311–319.

[6] Swenson, Rod, “Autocatakinetics, Yes – Autopoiesis, No: Steps Toward a Unified Theory of Evolutionary Ordering”, in: Int. J. General Systems, Vol. 21, 1992, pp. 207–228.

[7] Quoted from Malabou, Catherine, What Should We Do With Our Brain (Fordham University Press 2008), p. 41.

[8] Head, Simon, “Worse Than Wal-Mart: Amazon’s Sick Brutality and Secret History of Intimidating Workers”, Salon, February 23, 2014, https://www.salon.com/2014/02/23/worse_than_wal_mart_amazons_sick_brutality_and_secret_history_of_ruthlessly_intimidating_workers/